Engineers Debate: What Sends Troubleshooting in the Wrong Direction?

Today, we have a more philosophical discussion of troubleshooting in place of a specific case history. In a recent set of discussions, a younger engineer raised the question, “Is data analytics useful for troubleshooting?”

The context of the question gave me pause.

The real question was, “Is data analytics the most important thing in troubleshooting?”

Experienced troubleshooters responded that understanding the problem and applying fundamentals were more important.

My own initial response was: define your objectives.

What do you want the plant to make, and from what do you want to make it? The “understanding the problem” part of troubleshooting overlaps with defining your objectives. Depending upon how you think, the two may mean the same thing.

I don’t intend to run down data analytics. Troubleshooting includes analyzing data. However, there are multiple ways to do this, and an overall term like data analytics does not tell you much.

Troubleshooting requires a series of steps to solve a problem, all of which must be successful. Since they must all be successful, it’s hard to say which is most important. If any step fails, the entire troubleshooting effort fails.

After some thought, I refined the initial question to a more important one: Which step causes the most failures in troubleshooting problems? This is a much more helpful question, so now we can focus on making troubleshooting more effective.

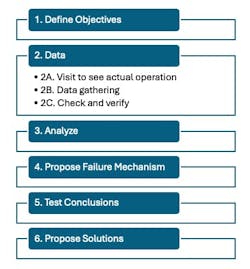

Over time, my approach to troubleshooting has changed. Currently, my pattern of thinking most closely follows the outline in Figure 1. This shows six major steps. All are broken down into multiple tasks, but Figure 1 only breaks down one area (Data).

Based on experience and observation, the two areas most likely to cause troubleshooting to fail are (1) Define Objectives and (5) Test Conclusions.

Defining objectives includes understanding what you want the process to do. This includes knowing both the feeds and products and the basic route from one to the other. For defining objectives, products include environmental emissions and discharges, “unwanted” streams, and the “normally” defined products.

Objectives also include capacity, efficiency, reliability and safety factors. Too often, troubleshooting starts with assumed objectives without checking whether those assumptions are valid. Perfunctory objective analysis has a high probability of sending troubleshooting in the wrong direction.

Testing conclusions includes three major components:

- Does the proposed failure mechanism explain everything the plant sees?

- Do we see everything that the proposed failure mechanism should make occur?

- Can we predict how plant performance will change when operating conditions are changed?

Failure to test proposed failure mechanisms is a major source of failed troubleshooting efforts.
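As a rough illustration only, the three checks above can be written as simple comparisons between what a proposed mechanism predicts and what the plant shows. The sketch below is not a method from this column: the names `Hypothesis`, `check_mechanism`, and `prediction_holds`, the flooding example, and every symptom and number in it are hypothetical, invented to show the logic.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    """A proposed failure mechanism (hypothetical structure for illustration)."""
    name: str
    predicted_symptoms: set[str] = field(default_factory=set)


def check_mechanism(hypothesis: Hypothesis, observed: set[str]) -> dict:
    """Apply the first two checks to a proposed failure mechanism."""
    # Check 1: does the mechanism explain everything the plant sees?
    unexplained = observed - hypothesis.predicted_symptoms
    # Check 2: do we see everything the mechanism should make occur?
    missing = hypothesis.predicted_symptoms - observed
    return {
        "unexplained": unexplained,
        "missing": missing,
        "consistent": not unexplained and not missing,
    }


def prediction_holds(predicted_direction: int, before: float, after: float,
                     tolerance: float = 0.0) -> bool:
    """Check 3: did a measurement move the way the mechanism predicted
    after an operating condition was changed?

    predicted_direction is +1 (increase), -1 (decrease) or 0 (no change).
    """
    change = after - before
    if predicted_direction > 0:
        return change > tolerance
    if predicted_direction < 0:
        return change < -tolerance
    return abs(change) <= tolerance


# Made-up example: a tower suspected of flooding.
flooding = Hypothesis(
    name="tray flooding",
    predicted_symptoms={"high pressure drop", "loss of separation",
                        "liquid carryover"},
)
observed = {"high pressure drop", "loss of separation",
            "low reflux drum level"}

result = check_mechanism(flooding, observed)
print("Unexplained observations:", sorted(result["unexplained"]))
print("Predicted but not seen:", sorted(result["missing"]))
print("Mechanism consistent:", result["consistent"])

# Plant test: the mechanism predicts pressure drop falls when feed is cut.
print("Prediction held:", prediction_holds(-1, before=12.0, after=9.5))
```

In this invented case the mechanism fails both of the first two checks: one observation is left unexplained and one predicted symptom is absent. Either gap is a reason to keep investigating before accepting the conclusion.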

Coming back to data analytics: it is a tool, potentially a useful one, but it is not a panacea that replaces understanding your objectives and the process.

About the Author

Andrew Sloley, Plant InSites columnist

ANDREW SLOLEY is a Chemical Processing Contributing Editor.